Apple is adding a wide range of new accessibility features

Google officially introduced Live Caption with Android 10, a headline feature that generates captions for videos, podcasts or audio messages playing on your phone. The company has been expanding the service's capabilities ever since and now uses the same technology to power Live Translate. Three years after Google first announced its solution, Apple is introducing something similar for iPhones, along with a number of other accessibility tools.

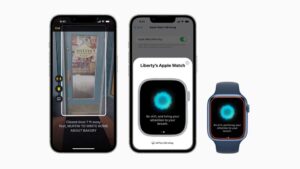

Live Captions comes to iPhone, iPad and Mac with features similar to those of its Android counterpart. It automatically generates on-screen text from any audio content (such as a FaceTime call, a social media app or streaming content) so that deaf and hard-of-hearing users can follow conversations more easily.

The caption display will be flexible, allowing users to adjust the font size to their preference. In FaceTime calls, the system will automatically attribute the transcribed dialogue to each participant, and on Macs users will be able to type a response and have it spoken aloud to the others on the call. As on Android, Live Captions on iPhones will be generated on the device, so Apple cannot listen to any of it.

Meanwhile, Apple is also introducing a Door Detection feature for users who are blind or have low vision. Door Detection will use the iPhone's LiDAR scanner, camera and machine learning to locate a door when a user arrives at a new destination, gauge how far away it is and note whether it is open or closed. Since LiDAR is a prerequisite for the feature, it will likely be limited to the iPhone 12 Pro and 13 Pro models and some iPad Pros.

Apple showcased a host of other accessibility features as well, including the ability to read signs and symbols around doors. There are also new wearable features such as Apple Watch Mirroring, which lets users control their Apple Watch with the iPhone's assistive features (like Switch Control and Voice Control), and more than 20 additional VoiceOver languages, including Bengali and Catalan.
