Apple’s “Communication Safety in Messages” feature, which is designed to automatically blur images containing nudity sent to children using the company’s messaging service, is now rolling out to more countries. Following its launch in the US last year, the feature is coming to the Messages apps on iOS, iPadOS, and macOS for users in the UK, Canada, New Zealand, and Australia. Exact timing is unclear, but The Guardian reports that the feature is coming to the UK “soon.”

Scanning happens on the device and does not affect the end-to-end encryption of messages. Instructions on how to enable the feature, which is integrated with Apple’s existing Family Sharing system, can be found here.

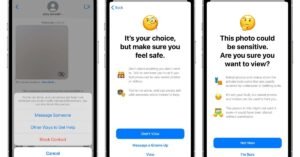

The opt-in feature scans incoming and outgoing images for “sexually explicit” material to protect children. If such material is found, the image is blurred and the child is offered guidance on finding help, along with reassurance that it is okay not to view the image and to leave the conversation. “You are not alone and can always get help from someone you trust or with trained professionals,” the pop-up message reads. “You can also block this person.”

As with the original US release, children will have the option to message an adult they trust about a flagged image. When Apple first announced the feature last August, it suggested that this notification would happen automatically. Critics were quick to point out that this approach risked outing queer children to their parents and could be abused in other ways.

Apple is also expanding the rollout of a new feature for Spotlight, Siri, and Safari searches that points users toward safety resources if they search for topics related to child sexual abuse.

In addition to these two child safety features, Apple originally announced a third initiative last August that involved scanning photos for child sexual abuse material (CSAM) before they are uploaded to a user’s iCloud account. However, this feature drew intense backlash from privacy advocates, who argued that it risked introducing a back door that would undermine the security of Apple’s users. The company later announced that it would delay the rollout of all three features while it addressed these concerns. Having since released the first two features, Apple has yet to say when the more controversial CSAM detection feature will be available.